In our previous blog, CISO's Guide: Introduction to Machine Learning for Cyber Security, we discussed why machine learning is one of the most powerful tools at a CISO's disposal for detecting today's insidious cyberattacks more quickly. In this blog, we'll dive into the principles of machine learning so you can introduce it into your security operations more effectively.

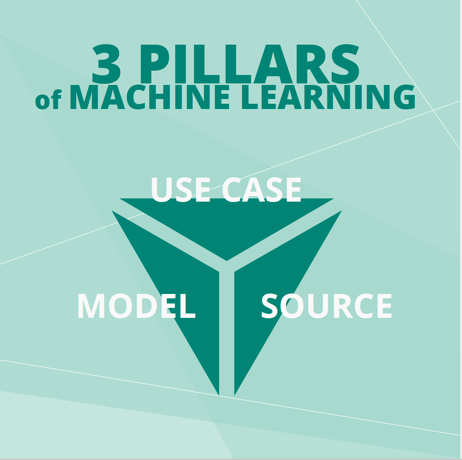

Three Pillars of Machine Learning

To properly plan for the successful use of machine learning, it's important to move beyond the standard marketing claims and descriptions to understand the key technical concepts.

The three pillars of machine learning are:

- Use Case – What type of attack am I trying to find and at what stage in the kill chain will I be looking?

- Model – Given what I am looking for, what model (algorithm) is most appropriate?

- Source/Data – Now that I have the model, what data—the raw feedstock, if you will—is best suited to provide the model with the most meaningful and actionable information? For security machine learning, the input can be packets, flows, logs, alerts and text, such as performance reviews and external threat intelligence.

Once these variables are defined, the next question is: Can the model scale with the amount of data and the scope of the use case? Machine learning solutions are based on a set of well-researched and well-documented mathematical models. While the basic algorithms and processes are not secret, how they are used and implemented in an end-to-end system will determine the value they deliver.

What's the Difference: Unsupervised vs. Supervised Machine Learning

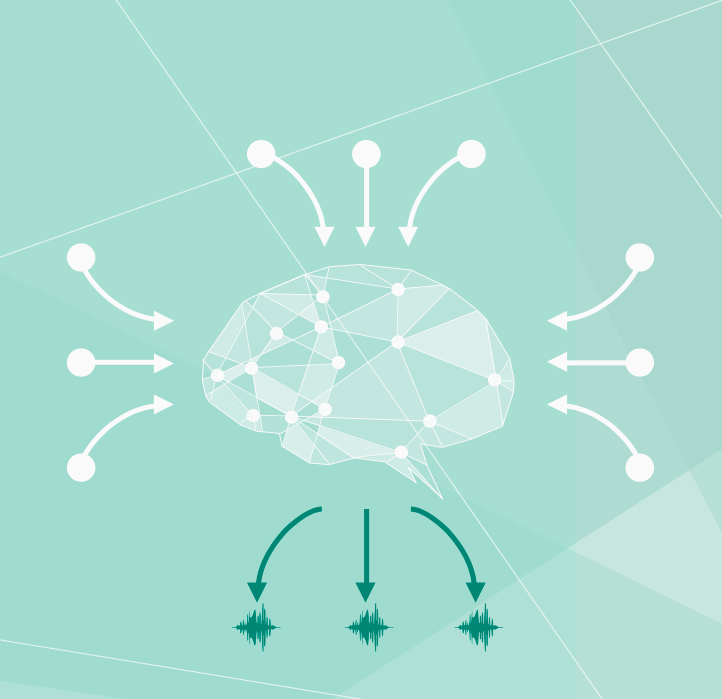

The usage and implementation of machine learning—the "how"—generally fall into two main categories: unsupervised and supervised machine learning.

Unsupervised machine learning – To more effectively detect the behavioral changes missed by alternative security strategies such as pattern matching or rules, machine learning requires a backdrop of "normal" so it can then detect deviations from that norm. Once a baseline is determined and in place, abnormal behaviors are then flagged as possible indicators of an attack in progress.

By looking at each user's demographic and IT activity profile (such as which organization am I in, who is my boss, what systems and applications do I access, and when do I access them), an unsupervised machine learning model automatically builds a baseline of normal activity.

For unsupervised machine learning models, no rules are created to deliver results. The model may take a period of time (say, 10 to 14 days) to build up a reliable baseline.
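As a rough illustration of the baselining idea, the Python sketch below summarizes one user's daily activity and flags days that stray far from it. The activity counts, the 14-day window, and the three-standard-deviation threshold are assumptions chosen for illustration; a production model would baseline many signals per user, not a single count.

```python
from statistics import mean, stdev

def build_baseline(daily_counts):
    """Summarize a user's normal activity as (mean, standard deviation)."""
    return mean(daily_counts), stdev(daily_counts)

def is_anomalous(value, baseline, threshold=3.0):
    """Flag a value that lies more than `threshold` standard deviations from the mean."""
    mu, sigma = baseline
    if sigma == 0:
        # A perfectly flat baseline: any change at all is a deviation.
        return value != mu
    return abs(value - mu) / sigma > threshold

# Hypothetical data: number of systems one user accessed per day over 14 days.
history = [5, 6, 5, 7, 6, 5, 6, 7, 5, 6, 6, 5, 7, 6]
baseline = build_baseline(history)

print(is_anomalous(6, baseline))   # a typical day
print(is_anomalous(40, baseline))  # a sudden spike in systems accessed
```

No rule such as "alert above 20 systems" is written by hand here. The cutoff comes from the user's own history, which is why the model needs some days of data before its baseline is reliable.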

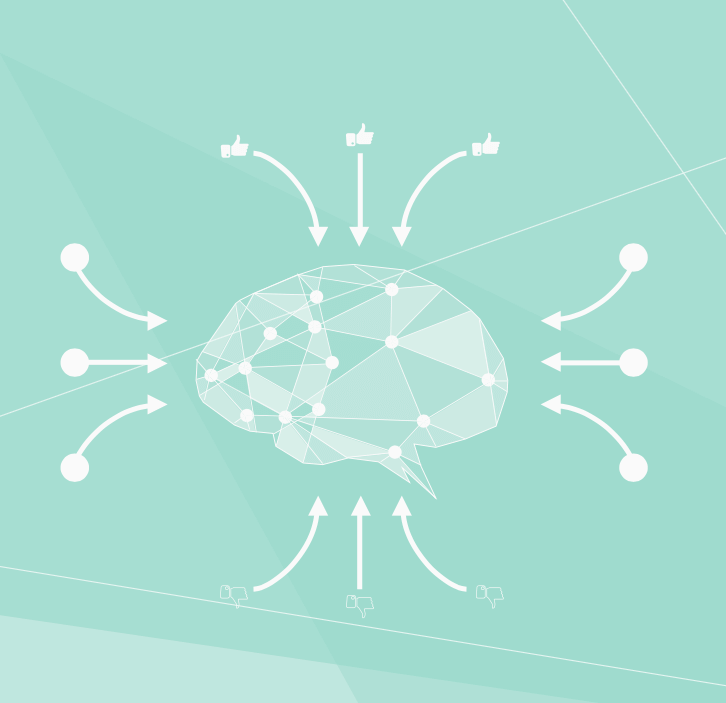

Supervised machine learning – Once an anomaly (a change in behavior) is discovered, how do you determine if it is part of an attack? That's where supervised machine learning comes in. If unsupervised models are self-learning, supervised models must be "taught" to detect a specific condition. Researchers identify attack methodologies and collect datasets to "train" algorithms to recognize specific attack elements. Once trained, these algorithms can then predict "good" or "bad" on new, unseen datasets.

Consider a bag of marbles that are white, black, or shades of gray. Think of the color as a proxy for whether something is "good," "bad," or "inconclusive." The closer a marble is to white, the more likely it is "good," and the closer it is to black, the more likely it is "bad." Using supervised machine learning, a data scientist can take a bag of marbles and "train" the model by categorizing each marble as either "white" or "black," including the marbles that are shades of gray.

Once the model is trained, it can look at new marbles and put them in the "black" or "white" bucket, with a probability assigned to designate how confident it is in the result. For gray marbles that are mostly black or mostly white, the confidence will be high. For marbles in the middle, which could be put in either category, confidence will be low.
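The marble analogy can be sketched in a few lines of Python. This is a minimal example, assuming each marble is described by a single shade value from 0.0 (white) to 1.0 (black) and that the model is a one-feature logistic regression trained by gradient descent. The shade values, learning rate, and epoch count are illustrative assumptions.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def train(shades, labels, lr=0.5, epochs=5000):
    """Fit a one-feature logistic regression with batch gradient descent."""
    w, b = 0.0, 0.0
    n = len(shades)
    for _ in range(epochs):
        grad_w = grad_b = 0.0
        for x, y in zip(shades, labels):
            err = sigmoid(w * x + b) - y   # prediction error for this marble
            grad_w += err * x
            grad_b += err
        w -= lr * grad_w / n
        b -= lr * grad_b / n
    return w, b

def predict(shade, model):
    """Return (bucket, confidence) for a new marble."""
    w, b = model
    p_black = sigmoid(w * shade + b)
    label = "black" if p_black >= 0.5 else "white"
    return label, max(p_black, 1.0 - p_black)

# Labeled training marbles: 0 = "white" bucket, 1 = "black" bucket.
shades = [0.0, 0.1, 0.2, 0.3, 0.7, 0.8, 0.9, 1.0]
labels = [0, 0, 0, 0, 1, 1, 1, 1]
model = train(shades, labels)

for shade in (0.05, 0.5, 0.95):
    print(shade, predict(shade, model))
```

Marbles near either end of the scale come back with a confidence close to 1, while a mid-gray marble comes back close to 0.5, which is the model's way of saying it could go in either bucket.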

An example in the security domain would be a supervised machine learning model looking for a command-and-control connection to an attacker from a compromised system. The data for this model comes from DNS requests. The model is trained by exposing it to a large dataset of "good" domains (such as the Alexa top 1 million domains) and "bad" domains (such as those from different botnets), enabling it to automatically find the equivalent of "black" marbles in standard DNS data. For example, google.com is a good domain, while ufclo9da.e6ytwx-sf2l.com would be a bad domain.
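To make the DNS example concrete, here is a toy Python sketch of a supervised domain classifier. It is not the model described above: it assumes three hand-picked features (character entropy, digit fraction, vowel fraction) and a simple nearest-centroid rule. The "bad" training domains are invented random strings, not real botnet data. A real system would train on far larger labeled sets with richer features.

```python
import math
from collections import Counter

VOWELS = set("aeiou")

def features(domain):
    """Map a domain to (character entropy, digit fraction, vowel fraction)."""
    name = domain.lower().rsplit(".", 1)[0]      # drop the top-level domain
    chars = [c for c in name if c.isalnum()]     # ignore dots and hyphens
    n = len(chars)
    counts = Counter(chars)
    entropy = -sum((k / n) * math.log2(k / n) for k in counts.values())
    digits = sum(c.isdigit() for c in chars) / n
    vowels = sum(c in VOWELS for c in chars) / n
    return (entropy, digits, vowels)

def centroid(domains):
    """Average feature vector of a labeled group of domains."""
    rows = [features(d) for d in domains]
    return tuple(sum(col) / len(rows) for col in zip(*rows))

def train(good, bad):
    return {"good": centroid(good), "bad": centroid(bad)}

def classify(domain, model):
    """Assign the label whose centroid is closest to the domain's features."""
    f = features(domain)
    return min(model, key=lambda label: math.dist(f, model[label]))

# Tiny hypothetical training set; the "bad" names are made-up random strings.
good = ["wikipedia.org", "amazon.com", "microsoft.com", "github.com", "youtube.com"]
bad = ["xk2j9qpl4z.com", "q8w7e3rty1zx.net", "a9d8f7g6h5j4.info",
       "zx81vbn3mq0p.com", "p0o9i8u7y6t5.biz"]
model = train(good, bad)

print(classify("google.com", model))
print(classify("ufclo9da.e6ytwx-sf2l.com", model))
```

Neither test domain appears in the training lists, so the sketch mirrors the idea of predicting on new, unseen data. Because entropy grows with name length, this toy version would misjudge long legitimate domains, which is one reason production models rely on much more data and many more features.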

Learn More

In our next blog, explore how to get started with machine learning and user entity behavioral analytics.

Ready to learn more? Download the CISO's Guide to Machine Learning and User Entity Behavioral Analytics e-book now.