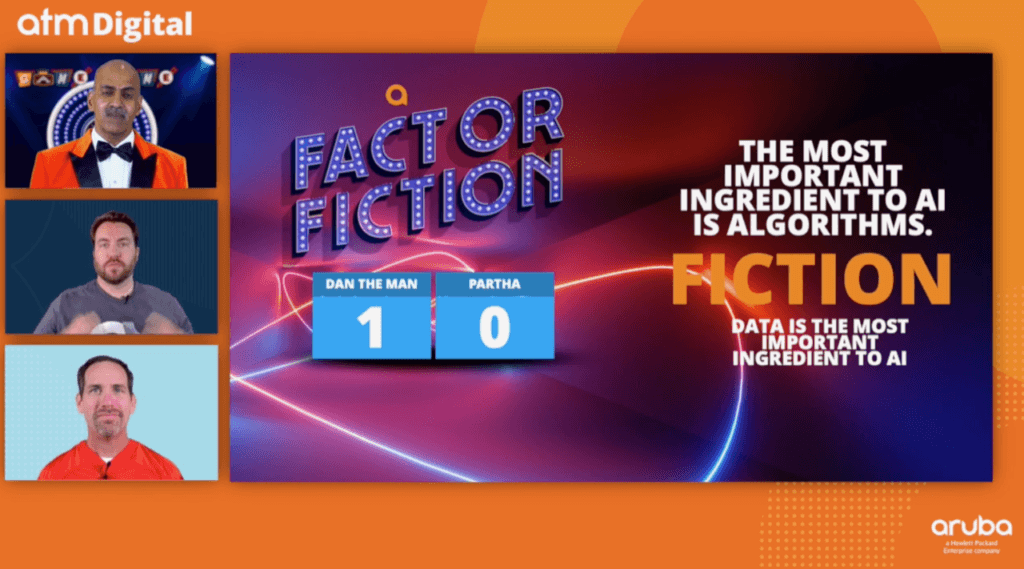

During the Aruba Atmosphere Digital technology keynote, Partha Narasimhan (@AirheadOne) did a fun game show asking all sorts of questions to his contestants, which I think might have secretly been Aruba employees. One of the fun things that stuck out in my head from that discussion was how they talked about AI and how it relates to Aruba's new ESP solution. Specifically, I wanted to call attention to this slide that happens about nine minutes into the talk:

It couldn't have been any more telling if Aruba had hired a skywriter to make the message appear over Silicon Valley. The most important part of the AI equation is not the algorithm that is written to predict the data. Instead, it is the data that is used to write and refine the algorithm.

The Scientific Method

When scientists run experiments using the scientific method, they follow a process:

- Make a hypothesis.

- Collect data.

- Observe the data.

- Determine if the data supports the hypothesis.

It's simple and straightforward. Notice that at no point does the data change. It's collected and observed. It is assumed to be valid at all times. But the hypothesis isn't. The hypothesis is just a guess, an educated one based on the data available so far. If the data supports the hypothesis, we keep testing until all of the data we can find supports it over and over again. If the data doesn't support the hypothesis, we change the hypothesis and see if the data supports the new educated guess.

The process doesn't change the data in any way. We don't change the data to support our educated guess. Likewise, when we're building an algorithm for an AI solution, we don't discard or modify data just because it doesn't fit the algorithm. The data is the most important part of what we do. The data is exactly what it is. There is no second-guessing it. We build the AI algorithm for the task at hand based on the data that has been collected. We wouldn't ignore or choose different data just because we didn't want something to show up in the algorithm.

Building Better AI Through Data

So, if data is the most important part of your AI platform, you'd better be collecting as much of it as possible, right? The only way that your AI algorithms are going to be anywhere remotely reliable is if they have all of the data they need to make everything work correctly. You have to account for every data point and collect as much as you can before you start processing things.

One of the biggest failures in building an algorithm is when people start with bad data or discard data that doesn't look "right" when training the system. If you hand-pick only the data you think you need before training starts, you're losing valuable input that can help you figure out why things are flawed and expose patterns of behavior.

Let's take a quick example. If you're feeding an AI system a series of logs for users logging in to the system, wouldn't you want to input all of the logs? You'd want to see any time anyone was connecting. Not just the stuff during work hours. You want to see the people that show up early and stay late. You want to know how utilized your systems are so you can plan maintenance windows. And you absolutely want to know when someone is accessing your system at odd hours in the middle of the night. Those patterns are exposed only when all of the data is fed into the model.
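To make that concrete, here is a minimal Python sketch of the login example. Everything in it is an assumption for illustration: the `LOGINS` records are invented, the 08:00 to 18:00 business-hours window is arbitrary, and the helper names (`parse`, `logins_per_hour`, `off_hours`) are hypothetical. It isn't how any particular product does it. It just shows what disappears when you filter the logs before analyzing them.

```python
from collections import Counter
from datetime import datetime

# Hypothetical login records: (username, ISO timestamp). Invented for illustration.
LOGINS = [
    ("alice", "2020-06-09T08:55:00"),
    ("bob", "2020-06-09T09:10:00"),
    ("carol", "2020-06-09T06:40:00"),  # shows up early
    ("bob", "2020-06-09T19:30:00"),    # stays late
    ("alice", "2020-06-10T09:02:00"),
    ("dave", "2020-06-10T03:12:00"),   # middle of the night
]

# Assumed business hours: 08:00 up to, but not including, 18:00.
WORK_START, WORK_END = 8, 18


def parse(records):
    """Turn (user, ISO string) pairs into (user, datetime) pairs."""
    return [(user, datetime.fromisoformat(ts)) for user, ts in records]


def logins_per_hour(events):
    """Count logins by hour of day, useful for planning maintenance windows."""
    return Counter(ts.hour for _, ts in events)


def off_hours(events, start=WORK_START, end=WORK_END):
    """Return the logins that fall outside the assumed business hours."""
    return [(user, ts) for user, ts in events if not start <= ts.hour < end]


events = parse(LOGINS)

# The "cleaned" data set: someone kept only the logins during work hours.
work_only = [e for e in events if WORK_START <= e[1].hour < WORK_END]

print("busiest hours:", logins_per_hour(events).most_common(2))
print("off-hours logins, all data:", [(u, t.isoformat()) for u, t in off_hours(events)])
print("off-hours logins, filtered data:", off_hours(work_only))
```

With the full set of logs, the early arrival, the late stay, and the 3 a.m. login all show up. With the pre-filtered set, the off-hours list comes back empty, and not because nothing happened.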

More appropriately, for technologies like networking and wireless, you need all of the data to build the algorithms that are going to be used to predict usage patterns and help troubleshoot. If you exclude data from a switch because it looks out of the norm, you're explicitly ignoring something that can end up helping you. Those aberrant data points aren't bad. They're very, very good. Because they refine the model. If something works 50% of the time, it's not good. If something works 99% of the time, it's better. If your model can only predict behavior 50% of the time, it needs refinement. But if it's 99% accurate, it's amazing. It only gets there if you include all of the data.
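Here's a toy Python sketch of that idea. The readings in `OBSERVATIONS` are made up, the "model" is nothing more than a single utilization threshold, and `fit_threshold` and `accuracy` are hypothetical helpers rather than anything from Aruba ESP. The point is only to show how a model built on curated data can look perfect on that data and still fall short once the excluded readings come back.

```python
# Hypothetical switch readings: (port utilization %, did a fault follow?).
# Invented numbers, purely for illustration.
OBSERVATIONS = [
    (12, False), (18, False), (25, False), (30, False),
    (22, False), (15, False), (97, True), (99, True),
]


def fit_threshold(observations):
    """Pick a utilization cutoff halfway between normal and faulty readings."""
    faults = [u for u, fault in observations if fault]
    normal = [u for u, fault in observations if not fault]
    if not faults:
        return float("inf")   # never saw a fault, so never predict one
    if not normal:
        return float("-inf")  # only saw faults, so always predict one
    return (max(normal) + min(faults)) / 2


def accuracy(threshold, observations):
    """Fraction of readings where 'utilization > threshold' matches reality."""
    hits = sum((u > threshold) == fault for u, fault in observations)
    return hits / len(observations)


# Someone dropped the readings that looked "out of the norm".
curated = [obs for obs in OBSERVATIONS if obs[0] < 50]

curated_model = fit_threshold(curated)
full_model = fit_threshold(OBSERVATIONS)

print("curated model on curated data:", accuracy(curated_model, curated))
print("curated model on all data:", accuracy(curated_model, OBSERVATIONS))
print("full model on all data:", accuracy(full_model, OBSERVATIONS))
```

The model trained on the curated set never learns that a fault is even possible, so it misses both of the readings that mattered. Those two aberrant data points are what turn a guess into a useful threshold.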

If you want to collect all of that data, you need the right tools. That's where Aruba can help you. With their wide variety of access points and edge computing devices, in addition to the new UXI G-Series sensors that build on technology from the Cape Networks acquisition, you can collect the data that you are missing from all points in the network. You can refine the algorithms used across Aruba ESP to show you all of the details that you've been missing. And because the data is the most important part of the equation, you're getting the right data when you need it and using it in the ways that it needs to be consumed.

Partha and I agree. The data is the most important part of what you do. Collect it, analyze it, and use it. Don't discard it and don't think that your algorithm is perfect. You never know when a few extra calibrations are all you need to outperform your wildest expectations.

Watch other sessions about AIOps, Unified Infrastructure and Zero Trust Security from ATM Digital.