The Aruba Technical Marketing team is constantly testing and proving (or disproving) a number of hypotheses in our Sunnyvale PoC Lab test environment. I think we are all aware by now (and if you aren't aware, where have you been?) that the BYOD phenomenon has led to an explosion in the number and types of mobile devices on the network. Like most vendors, we are all thinking about this Pandora's Box of new questions:

- What is the impact of these devices on per-client and aggregate throughput on the network?

- What is the maximum performance we can expect when mixing tablets, smartphones, and enterprise laptops together: 1 x 1, 2 x 2, and 3 x 3 clients capable of supporting different MCS rates (0-23) and running different OS types and form factors?

- As we slowly ramp up the number of devices, how does the aggregate performance stand up to this increase in contention for the shared medium: does it hold steady, or fall off a cliff like a "waterfall graph"?

It's enough to make you want to lie down!

One hypothesis we set out to prove is that Aruba's Adaptive Radio Management (ARM) allows for the highest level of performance in the industry. We chose to go head-to-head (for now) with Cisco's flagship 3600 AP, using the latest shipping software from both vendors.

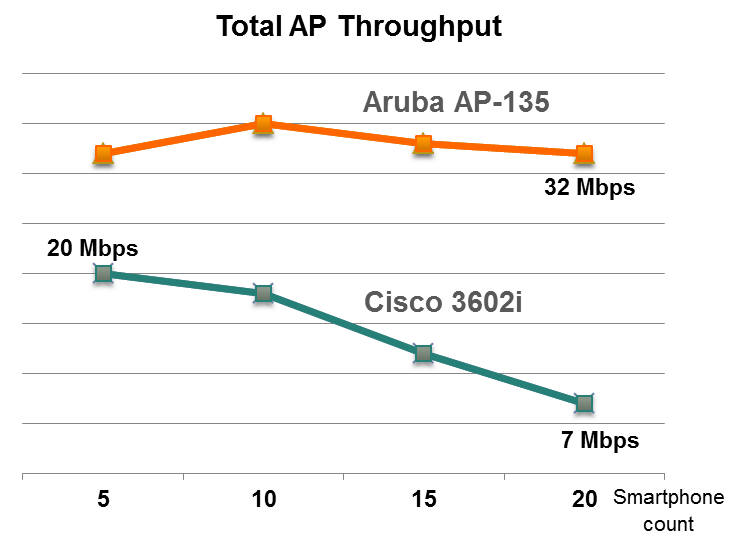

Here is what we found when we looked at just smartphones on a single 2.4 GHz radio: Aruba was able to maintain the aggregate throughput for 5, 10, 15, and 20 smartphones, while Cisco showed a steep drop-off as more clients were added. As the number of mobile devices increases, the maximum bandwidth per user will decrease (as there is more contention), but in a WLAN optimized for density the aggregate throughput should remain roughly the same. Aruba's ARM achieves this.

We noted that, with 20 smartphone clients, Cisco performed better in the downstream-only test than in the upstream-only or bidirectional tests. In the upstream-only case, Aruba's aggregate was 8x what Cisco could achieve.

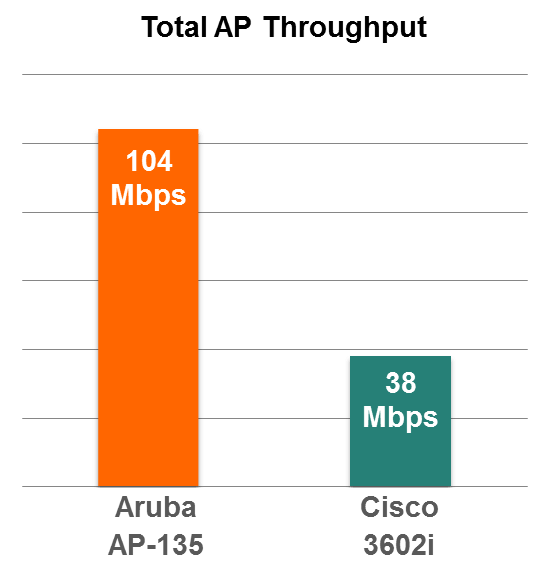

The next result was even more dramatic: when we added 20 laptops and 20 tablets into the mix with the 20 smartphones, the aggregate throughput for Aruba was 3x what Cisco was able to provide.

The smartphones in this 60-client test were on the 2.4 GHz band while the remaining clients were on 5 GHz, but for some reason the smartphones only achieved 2 Mbps of aggregate throughput with Cisco versus 33 Mbps with Aruba, even while isolated on a separate radio. Perhaps there is some cross-radio interference in the Cisco AP leading to this reduction compared with the smartphone-only test case.
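To put those two aggregate figures in per-device terms, here is a minimal Python sketch. It uses only the numbers quoted above (20 smartphones, 33 Mbps and 2 Mbps aggregate) and assumes the aggregate is split evenly across the smartphones, which a real test would not guarantee. The function and variable names are ours, purely for illustration.

```python
# Aggregate 2.4 GHz smartphone throughput quoted for the 60-client test.
SMARTPHONES = 20
aggregate_mbps = {"Aruba": 33.0, "Cisco": 2.0}


def per_client_mbps(aggregate: float, clients: int) -> float:
    """Average throughput per client, ASSUMING an even split across clients."""
    if clients <= 0:
        raise ValueError("clients must be positive")
    return aggregate / clients


# Average share per smartphone for each vendor.
per_client = {
    vendor: per_client_mbps(mbps, SMARTPHONES)
    for vendor, mbps in aggregate_mbps.items()
}

# How many times larger the Aruba aggregate is than the Cisco aggregate.
ratio = aggregate_mbps["Aruba"] / aggregate_mbps["Cisco"]

for vendor, mbps in per_client.items():
    print(f"{vendor}: {mbps:.2f} Mbps per smartphone on average")
print(f"Aggregate ratio: {ratio:.1f}x")
```

The even-split average is only a rough yardstick: individual smartphones may see more or less than this depending on airtime and rate.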

In a separate set of tests, we set out to determine which vendor had the better high-availability scenario. The key theme here is resilient connectivity for mobile devices and real-time applications – users care about network downtime in case of an outage (controller failure in this case), but they also care about their enterprise applications. The goal is for the high availability architecture to minimize downtime in larger scale networks and also offer application assurance. Questions we set out to answer included:

- How fast does the network recover after a failure?

- In the event of a failure, what is the impact on the default client behavior – do clients come back automatically?

- In the event of a failure, what is the impact on some common enterprise applications – how robust and resilient are they to a potential network outage?

For the first test, we used 12 APs and tested a controller failure by pulling the cable on the LAN interface of the primary controller. We found that Aruba was 7x faster than Cisco in bringing the entire network of 12 APs back online.

We then took the same 60-client BYOD testbed, associated to the 12 APs, and set out to see how many clients remained on the network after the outage vs. how many were disconnected. We found that all 60 clients were back online after the controller failure event with Aruba, but 10 were stranded with Cisco and required manual intervention to get back on the network.

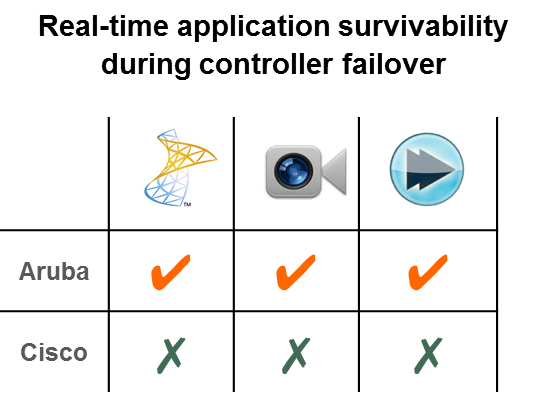
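As a quick way to read the client recovery counts above, this short Python sketch converts them into recovery rates. It uses only the figures from the test (60 clients, 0 stranded with Aruba, 10 stranded with Cisco); the helper name `recovery_rate` is ours and is just for illustration.

```python
# Client counts from the controller-failure test with the 60-client testbed.
TOTAL_CLIENTS = 60
stranded = {"Aruba": 0, "Cisco": 10}


def recovery_rate(total: int, stranded_count: int) -> float:
    """Fraction of clients that came back without manual intervention."""
    if total <= 0 or not 0 <= stranded_count <= total:
        raise ValueError("counts must satisfy 0 <= stranded <= total, total > 0")
    return (total - stranded_count) / total


rates = {vendor: recovery_rate(TOTAL_CLIENTS, n) for vendor, n in stranded.items()}

for vendor, rate in rates.items():
    recovered = TOTAL_CLIENTS - stranded[vendor]
    print(f"{vendor}: {recovered}/{TOTAL_CLIENTS} clients recovered ({rate:.1%})")
```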

Now here is the critical part: the user experience! It is not just about connectivity but also about applications and application assurance. We ran the same test as before, only with real applications running at the same time. Aruba was able to provide application assurance for Apple FaceTime, Microsoft Lync (Aruba is the only WLAN qualified under Microsoft's Lync certification), and video streaming, while Cisco was not.

Finally, for our "kitchen sink" test, we had 20 video calls, 35 video streaming sessions, and 5 file downloads active across all 60 clients. Aruba did not drop a single session across multiple runs, while Cisco dropped all 60.

Now I know what you are saying: "Well, that's an Aruba test, so what a surprise that you came out on top!" That's true, but here is the real deal, and no bull: we publish our testing setup, so we are not hiding behind a random "impartial" third party. How many people do that these days?

If you are interested, here are a few items you might like:

- "Campus Redundancy Models", our latest validated reference design guide, is posted to our VRD landing page (here).

- The Airbytes 2 video shows these results in a less "wordy" way; see the landing page for performance (here).

Look out for more testing updates from us in the future. Happy Wi-Fi-ing.